Hi friends! Have you ever wondered how to run an application without installing its dependencies on your local machine? We can do that by building and containerizing the application with Docker Engine. In this post, I’m going to show you step by step how I built and containerized Industri Pilar’s backend, which runs on Django and PostgreSQL, with Docker (a.k.a. dockerizing the application).

Wait, what is Docker?

According to the Docker Docs, Docker is an open platform for developing, shipping, and running applications. It lets us separate our applications from our infrastructure because it can package and run an application in a loosely isolated environment called a container. The isolation and security allow us to run many containers simultaneously on a given host. Containers are lightweight and contain everything needed to run the application, so we don’t need to rely on what is currently installed on the host. Problems caused by developers working on different operating systems go away, because everyone runs the same container and it behaves the same way everywhere.

How do we build the Docker container?

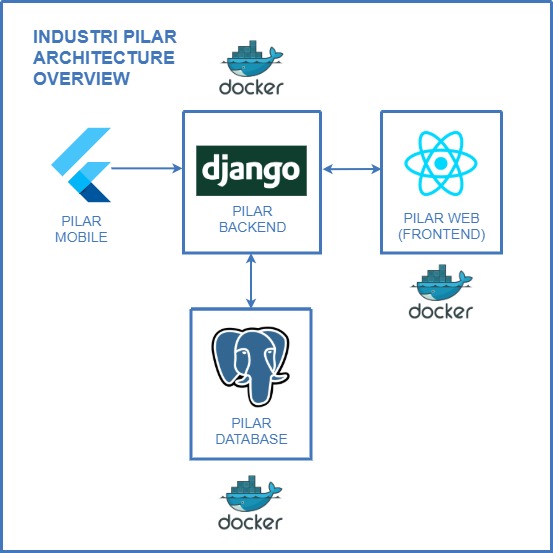

Industri Pilar Architecture Overview

For Industri Pilar’s backend, we use the Django framework with a PostgreSQL database to build the REST API. We containerized the application separately from the database, so we use Docker Compose to define and run the multi-container setup. To build the Docker image, we create a Dockerfile in our project’s root folder that defines the instructions for building the application image.

Dockerfile

    FROM python:3.8-slim-buster

    ENV PYTHONUNBUFFERED=1

    WORKDIR /code

    COPY requirements.txt /code/
    RUN pip3 install -r requirements.txt

    COPY . /code/

    COPY wait-for-it.sh /wait-for-it.sh
    RUN chmod +x /wait-for-it.sh

- FROM python:3.8-slim-buster: We use the Python 3.8 image from the Python Docker Official Images as the base for our image.
- ENV PYTHONUNBUFFERED=1: We set this environment variable so that Python doesn’t buffer stdout and stderr, which makes the application’s output appear in the container logs right away.
- WORKDIR /code: We set /code as the working directory for the instructions that follow; Docker creates it if it doesn’t exist. This is where our source code lives in the container.
- COPY requirements.txt /code/: We copy requirements.txt from our local directory to the /code directory in the container.
- RUN pip3 install -r requirements.txt: We install all the dependencies that our Django application needs, as listed in requirements.txt.
- COPY . /code/: We copy all of the source code from our local directory to the /code directory in the container.
- COPY wait-for-it.sh /wait-for-it.sh: We copy the wait-for-it.sh bash script from our local directory (downloaded from the open source wait-for-it project) to /wait-for-it.sh in the container. This script makes sure that the Django application container waits for the PostgreSQL database container to be online before it starts.
- RUN chmod +x /wait-for-it.sh: We make the wait-for-it.sh script executable so that we can run it.
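To make the role of wait-for-it a bit more concrete, here is a rough Python sketch of the same idea: keep trying to open a TCP connection until the port answers or a timeout expires. This is not the actual wait-for-it script (which is written in Bash); the function name wait_for_port, its parameters, and the default timeout are made up for illustration.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 15.0, interval: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout.

    Hypothetical helper for illustration; not part of wait-for-it itself.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # create_connection raises OSError while nothing accepts connections yet
            # (this also covers host names that cannot be resolved).
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)
    return False
```

Inside the web container, a call such as wait_for_port("db", 5432) would play the same role as /wait-for-it.sh db:5432. Note that an open port only shows that something is listening; it doesn’t guarantee that PostgreSQL has finished initializing.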

After that, we define a docker-compose.yml in our project’s root folder to configure the multi-container application.

docker-compose.yml

    version: "3.9"

    services:
      db:
        image: postgres:13
        environment:
          - POSTGRES_DB=<database-name>
          - POSTGRES_USER=<database-user>
          - POSTGRES_PASSWORD=<database-password>
          - POSTGRES_HOST=<database-host>
      web:
        build: .
        command: bash -c "
          python manage.py makemigrations &&
          python manage.py migrate &&
          /wait-for-it.sh db:5432 -- python manage.py runserver 0.0.0.0:8000"
        volumes:
          - ./wait-for-it.sh:/wait-for-it.sh
          - .:/code
        ports:
          - "8000:8000"
        depends_on:
          - db
        env_file: .env

In this docker-compose.yml, we tell the database container, named db, to use the Postgres 13 image from the Postgres Docker Official Images. Then we define the environment variables that the database needs. The Django application container, named web, is built from the Dockerfile we created earlier. Its command makes and applies the migrations, waits for the database on port 5432 to come online using the wait-for-it script, and then runs the Django application on port 8000. The volumes part mounts the wait-for-it script and our project directory into the container, so the container sees our local source code. The ports part maps port 8000 in the container to port 8000 on the host. Don’t forget the depends_on part on the web service, which tells Compose to start db before web. It only controls the start order and doesn’t wait until PostgreSQL is ready to accept connections, which is why we still need wait-for-it. Because migrate also needs a reachable database, you may want to move the wait-for-it call in front of the migration commands. Finally, env_file tells Compose which file holds the environment variables for the web application.
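The variables loaded through env_file end up in the web container’s environment, where Django can read them. As a minimal sketch, the database settings could be built like this. The post doesn’t show the contents of .env, so the variable names here (reusing the POSTGRES_* names from the db service, plus a hypothetical POSTGRES_PORT) and the helper database_settings are assumptions, not the project’s actual settings.

```python
import os


def database_settings(env=os.environ):
    """Build a Django DATABASES dict from environment variables.

    Variable names are assumed for illustration; adjust them to match your .env.
    """
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env["POSTGRES_DB"],
            "USER": env["POSTGRES_USER"],
            "PASSWORD": env["POSTGRES_PASSWORD"],
            # Inside the Compose network, the database is reachable by its service name.
            "HOST": env.get("POSTGRES_HOST", "db"),
            # POSTGRES_PORT is a hypothetical variable; 5432 is PostgreSQL's default port.
            "PORT": env.get("POSTGRES_PORT", "5432"),
        }
    }
```

In settings.py this would be used as DATABASES = database_settings(). Falling back to db for the host works because Compose lets containers reach each other by service name.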

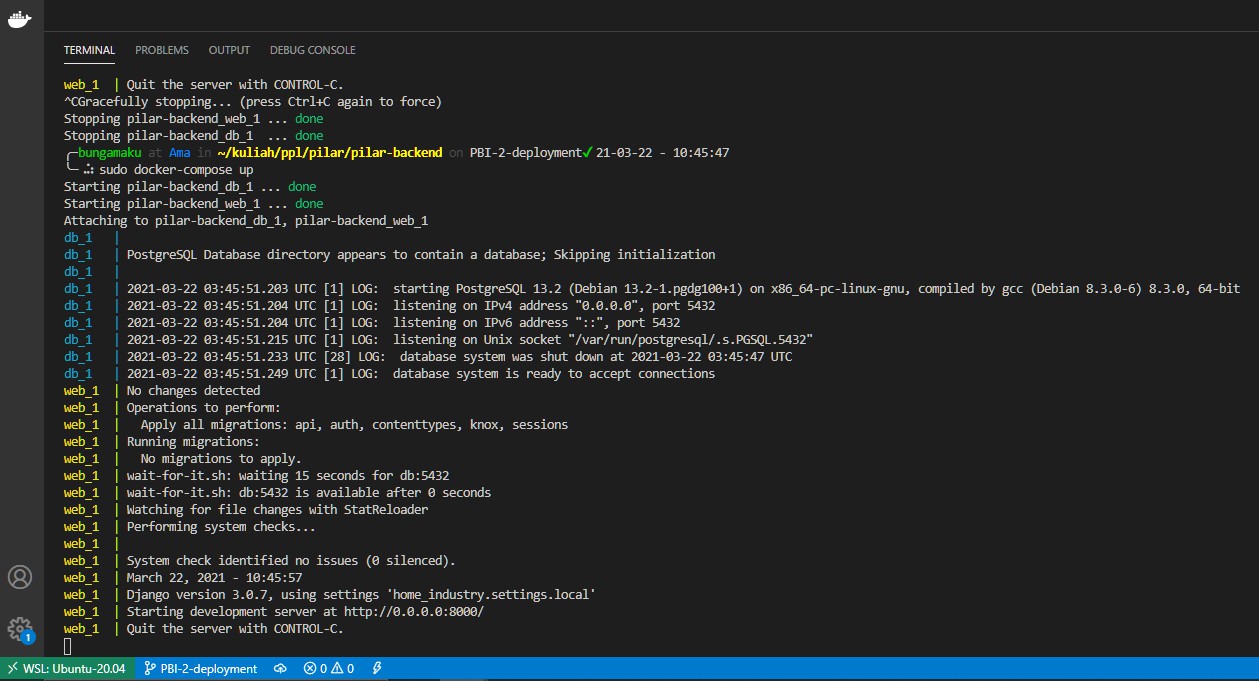

After that, we just need to create the environment variables file named .env on our project’s root folder and then run docker-compose with sudo docker-compose up to build our image, create the containers, and start the containers. If the container is running properly, it will looks like this:

Insdustri Pilar's backend running container

In another terminal, list the running containers to find their IDs:

$ docker ps

To run collectstatic, run:

$ docker exec -it <web-container-id> python manage.py collectstatic --noinput

To create or update the API configuration in the database, run:

$ docker exec -it <web-container-id> python manage.py createorupdateapiconfig

To access the database, run:

$ docker exec -it <postgres-container-id> psql -U <database-user> -d <database-name> -h db

That’s it! We have dockerized Industri Pilar’s backend, which uses Django and PostgreSQL, with Docker Engine and Docker Compose. If you want to explore more about Docker, you can visit the official Docker Docs.